
# SQL Server deadlock: Kaleo workflow code

The partial SQL gave me not only the code that caused the issue but also the code that called it.
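Much of that caller context is carried in the XML deadlock report, whose process entries include an execution stack as well as the input buffer. Below is a minimal sketch that pulls each participant's SPID, victim flag, lock mode, isolation level and call stack out of such a report. It assumes you can export the report as an XML string (for example from the system_health Extended Events session or a saved deadlock graph). The element and attribute names are meant to mirror SQL Server's report format, but the sample document, SPIDs, procedure names and the helper `summarize_deadlock` are hypothetical placeholders, not data from this deadlock.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical, heavily trimmed deadlock report. Element and attribute names
# are meant to mirror SQL Server's XML deadlock report; every value is an
# illustrative placeholder (procedure names, SPIDs, statement text).
SAMPLE_REPORT = """
<deadlock>
  <victim-list>
    <victimProcess id="processA" />
  </victim-list>
  <process-list>
    <process id="processA" spid="51" lockMode="X"
             isolationlevel="read committed (2)" waittime="1953">
      <executionStack>
        <frame procname="hypothetical.insert_history" line="191">INSERT INTO history ...</frame>
        <frame procname="hypothetical.caller_proc" line="12">EXEC hypothetical.insert_history ...</frame>
      </executionStack>
      <inputbuf>SET NOCOUNT OFF ...</inputbuf>
    </process>
    <process id="processB" spid="52" lockMode="X"
             isolationlevel="read committed (2)">
      <executionStack>
        <frame procname="hypothetical.insert_history" line="191">INSERT INTO history ...</frame>
      </executionStack>
      <inputbuf>SET NOCOUNT OFF ...</inputbuf>
    </process>
  </process-list>
</deadlock>
"""


def summarize_deadlock(xml_text: str) -> List[Dict]:
    """Return one dict per process: SPID, victim flag, lock info and call stack."""
    root = ET.fromstring(xml_text.strip())
    # iter() searches the whole tree, so a <deadlock-list> wrapper also works.
    victims = {v.get("id") for v in root.iter("victimProcess")}
    summary = []
    for proc in root.iter("process"):
        # In this sample, frames run from the blocked statement out to its caller.
        stack = [
            (f.get("procname"), f.get("line"), (f.text or "").strip())
            for f in proc.iter("frame")
        ]
        summary.append({
            "spid": proc.get("spid"),
            "victim": proc.get("id") in victims,
            "lock_mode": proc.get("lockMode"),
            "isolation": proc.get("isolationlevel"),
            "wait_time": proc.get("waittime"),  # None when the attribute is absent
            "stack": stack,
            "inputbuf": (proc.findtext("inputbuf") or "").strip(),
        })
    return summary


for p in summarize_deadlock(SAMPLE_REPORT):
    role = "victim" if p["victim"] else "survivor"
    print(f"SPID {p['spid']} ({role}, {p['lock_mode']}, {p['isolation']})")
    for procname, line, text in p["stack"]:
        print(f"    {procname} line {line}: {text}")
```

Listing every frame rather than only the input buffer is the point here: the input buffer shows how the batch started, while the stack shows which statement was actually blocked and what invoked it.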

# SQL Server deadlock: Kaleo workflow full

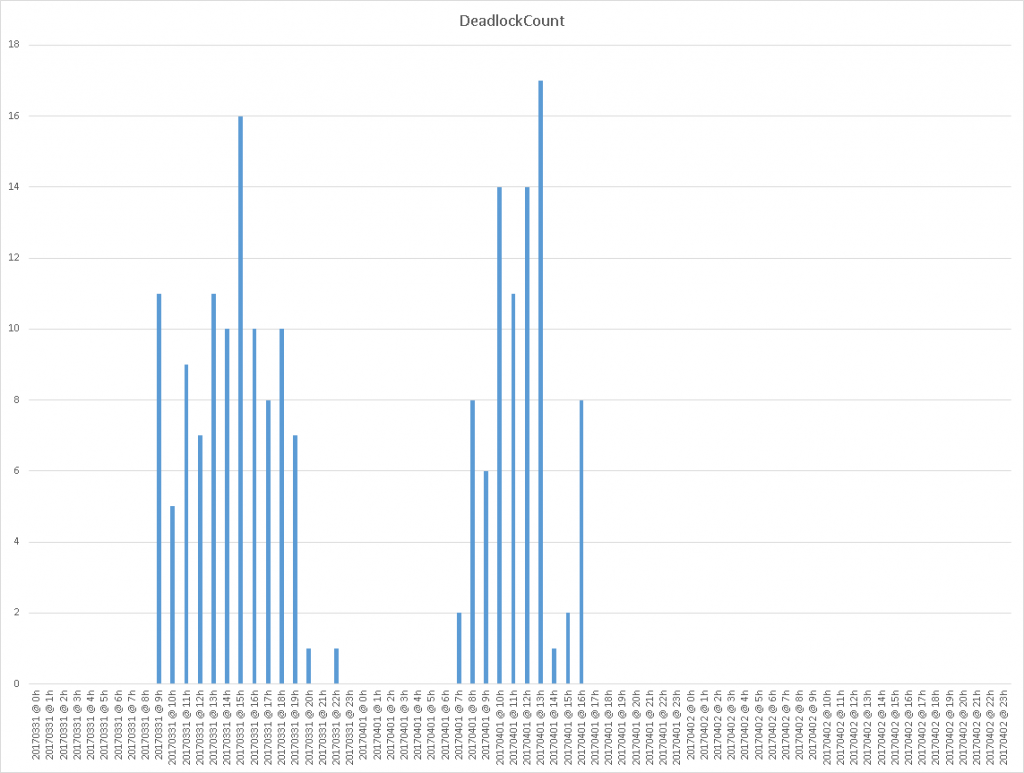

The READ_COMMITTED_SNAPSHOT option should handle the read part: those reads should go to the snapshot and not cause any locking issues with the table. If anyone has ideas about what's causing this, I would love to hear them, and any suggestions for tracking it down would also be a great help.

I'm evaluating two SQL Server monitoring products right now (Redgate and SQL Sentry). I'm not entirely sold on either, though I'm leaning towards SQL Sentry at the moment.

I'm having trouble getting this information out of the error log. It's a wall of text: we were having 20+ deadlocks per hour, and each one spans multiple lines. Here is what Redgate's SQL Response parses out of the error log; I hope it has all of the information:

- `Deadlock encountered.`
- `SPID: 66 ECID: 0 Statement Type: INSERT Line #: 191`
- `Input Buf: Language Event: int)SET NOCOUNT OFF UPDATE.`
- `SPID: 145 ECID: 0 Statement Type: INSERT Line #: 191`
- `Input Buf: Language Event: int bit)SET NOCOUNT OFF INSER`

I'm with SQL Sentry and noticed your post. SQL Sentry provides a complete graphical representation of all deadlocks, with a grid listing all nodes organized by resource, owners, and waiters, and it includes the full SQL for all nodes on the Deadlocks tab in Performance Advisor. Additionally, you can double-click any deadlock in the Event Manager calendar view to jump right to this information. All of this is provided without any need to change trace flags. Feel free to contact us at support with any questions.

Yes, SQL Sentry did give us a way to track down what was causing the deadlocks: what's locking what, what's waiting for what, and which session was killed to let the other through. I've found some partial SQL, but not the full SQL you mention.
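If the error log is the only source, a small script can at least split the wall of text into one record per deadlock participant, which makes 20+ deadlocks an hour countable and comparable. This is a minimal sketch, assuming lines shaped like the SPID / Input Buf excerpt quoted in this post (which looks like trace-flag deadlock output). The regexes and the function name `parse_deadlocks` are hypothetical; real error-log lines carry extra timestamp and source text, which `re.search` simply skips over.

```python
import re
from typing import Dict, List, Optional

# Patterns modeled on the quoted excerpt. Real error-log lines usually carry a
# timestamp and source prefix, so re.search (not re.match) is used.
START_RE = re.compile(r"Deadlock encountered")
SPID_RE = re.compile(
    r"SPID:\s*(?P<spid>\d+)\s+ECID:\s*(?P<ecid>\d+)\s+"
    r"Statement Type:\s*(?P<stmt>\w+)\s+Line #:\s*(?P<line>\d+)"
)
INPUTBUF_RE = re.compile(r"Input Buf:\s*(?P<buf>.*)$")


def parse_deadlocks(lines: List[str]) -> List[List[Dict[str, str]]]:
    """Group log lines into deadlocks; each deadlock is a list of participants."""
    deadlocks: List[List[Dict[str, str]]] = []
    current: Optional[Dict[str, str]] = None  # participant opened by the last SPID line
    for line in lines:
        if START_RE.search(line):
            deadlocks.append([])  # a new deadlock block starts here
            current = None
            continue
        m = SPID_RE.search(line)
        if m and deadlocks:
            current = m.groupdict()
            current["input_buf"] = ""
            deadlocks[-1].append(current)
            continue
        m = INPUTBUF_RE.search(line)
        if m and current is not None:
            # Attach the input buffer to the SPID line that precedes it.
            current["input_buf"] = m.group("buf").strip()
    return deadlocks


SAMPLE_LOG = """\
Deadlock encountered.
SPID: 66 ECID: 0 Statement Type: INSERT Line #: 191
Input Buf: Language Event: int)SET NOCOUNT OFF UPDATE.
SPID: 145 ECID: 0 Statement Type: INSERT Line #: 191
Input Buf: Language Event: int bit)SET NOCOUNT OFF INSER
""".splitlines()

for n, participants in enumerate(parse_deadlocks(SAMPLE_LOG), start=1):
    for p in participants:
        print(f"deadlock {n}: SPID {p['spid']} {p['stmt']} line {p['line']}: {p['input_buf']}")
```

Feeding it a real log would just mean passing the file's lines instead of `SAMPLE_LOG`; the exact layout of the deadlock output can differ between SQL Server versions, so the patterns may need adjusting.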

# SQL Server deadlock: Kaleo workflow update

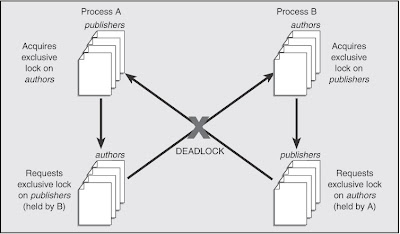

We've been getting a lot of deadlocks recently (starting maybe a month back), and this one seems the most perplexing to me. Two inserts into the same history table, whose transactions started about 5 seconds apart, deadlocked. This isn't a one-off fluke; it accounts for maybe 25% of our deadlocks.

Some basic stats (from the SQL Sentry console):

- Mode: both are exclusive (X) locks on the table for the insert.
- Wait time: the loser (the deadlock victim) has a wait time of 1953.

I see what's happening, but I don't see why they're not just queuing up like a good table would. I do a lot more to other tables (deletes, updates, inserts, reads), and they get slow but don't deadlock. The only thing that looks at all fishy is the isolation level, read committed (2), because we use READ_COMMITTED_SNAPSHOT. I understand it acts like level 2 in many ways, but this table is never updated or deleted from, only inserted into and read.
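On the "why don't they just queue" question: blocked sessions do queue as long as the waits form a chain that ends at a session that is still running. A deadlock is a cycle in the wait-for relationships, which no amount of waiting resolves, so SQL Server's lock monitor picks a victim and rolls it back. READ_COMMITTED_SNAPSHOT only changes read-committed readers (they read row versions instead of taking shared locks); the exclusive locks taken by the INSERTs still take part. The toy model below illustrates the cycle idea with hypothetical sessions (`tx1`, `tx2`), resources (`res_A`, `res_B`) and helper names (`build_wait_for`, `find_cycle`). It is not SQL Server's detector and says nothing about which resources these particular inserts actually locked.

```python
from typing import Dict, List, Optional


def build_wait_for(holds: Dict[str, str], wants: Dict[str, str]) -> Dict[str, str]:
    """Map each blocked session to the session holding the resource it wants.

    holds: resource -> session that holds an exclusive lock on it (hypothetical model)
    wants: session  -> resource it is waiting to lock
    """
    return {s: holds[r] for s, r in wants.items() if r in holds and holds[r] != s}


def find_cycle(waits_for: Dict[str, str]) -> Optional[List[str]]:
    """Return the sessions in a wait-for cycle, or None if the waits are just a queue.

    In this toy model each session waits on at most one other session.
    """
    for start in waits_for:
        path: List[str] = []
        seen = set()
        node = start
        # Follow "waits for" edges until we reach a running session or loop back.
        while node in waits_for and node not in seen:
            seen.add(node)
            path.append(node)
            node = waits_for[node]
        if node in seen:
            return path[path.index(node):]
    return None


# Queue: tx2 waits for tx1, tx1 is not waiting, so tx1 finishes and tx2 proceeds.
queue = build_wait_for({"res_A": "tx1"}, {"tx2": "res_A"})
print(find_cycle(queue))  # None

# Deadlock: each transaction holds the resource the other wants.
deadlock = build_wait_for(
    {"res_A": "tx1", "res_B": "tx2"},
    {"tx1": "res_B", "tx2": "res_A"},
)
print(find_cycle(deadlock))  # ['tx1', 'tx2']
```

Two inserts into the same table can land in the second pattern if each has already acquired an exclusive lock the other needs; the deadlock graph's resource list is where to check whether that is what happened here.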
